API Documentation

All CZLOapi endpoints follow this convention, which is the OpenAI API format.

To call a different model, you only need to change the model parameter.

Making a request

You can paste the command below into your terminal to run your first API request. Make sure to replace $OPENAI_API_KEY with your secret API key.

curl https://oapi.czl.net/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "Say this is a test!"}],
    "temperature": 0.7
  }'

This request asks the gpt-3.5-turbo model to complete the text that begins with the prompt "Say this is a test!". You should receive a response similar to the following:

{
  "id":"chatcmpl-abc123",
  "object":"chat.completion",
  "created":1677858242,
  "model":"gpt-3.5-turbo-0301",
  "usage":{
    "prompt_tokens":13,
    "completion_tokens":7,
    "total_tokens":20
  },
  "choices":[
    {
      "message":{
        "role":"assistant",
        "content":"\n\nThis is a test!"
      },
      "finish_reason":"stop",
      "index":0
    }
  ]
}

You have now generated your first chat completion. The finish_reason is stop, which means the API returned the complete output generated by the model. The request above produced only one message, but you can set the n parameter to generate multiple message choices.
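
Reading the reply out of such a response can be sketched as follows. This is a minimal illustration that only uses the fields visible in the sample response above; the helper name summarize_choices is hypothetical and not part of any SDK.

```python
import json

# Sample body copied from the response shown above.
SAMPLE_RESPONSE = """
{
  "id": "chatcmpl-abc123",
  "object": "chat.completion",
  "created": 1677858242,
  "model": "gpt-3.5-turbo-0301",
  "usage": {"prompt_tokens": 13, "completion_tokens": 7, "total_tokens": 20},
  "choices": [
    {
      "message": {"role": "assistant", "content": "\\n\\nThis is a test!"},
      "finish_reason": "stop",
      "index": 0
    }
  ]
}
"""


def summarize_choices(response_text):
    """Return (index, finish_reason, content) for every choice in a response body."""
    body = json.loads(response_text)
    return [
        (choice["index"], choice["finish_reason"], choice["message"]["content"])
        for choice in body["choices"]
    ]


if __name__ == "__main__":
    # With n greater than 1 the loop would print one line per choice.
    for index, reason, content in summarize_choices(SAMPLE_RESPONSE):
        print(index, reason, repr(content))
```

Iterating over choices rather than reading only the first entry keeps the same code usable when n is set above 1.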

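Models

List and describe the various models available in the API. You can refer to the models documentation to understand which models are available and how they differ.

List models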

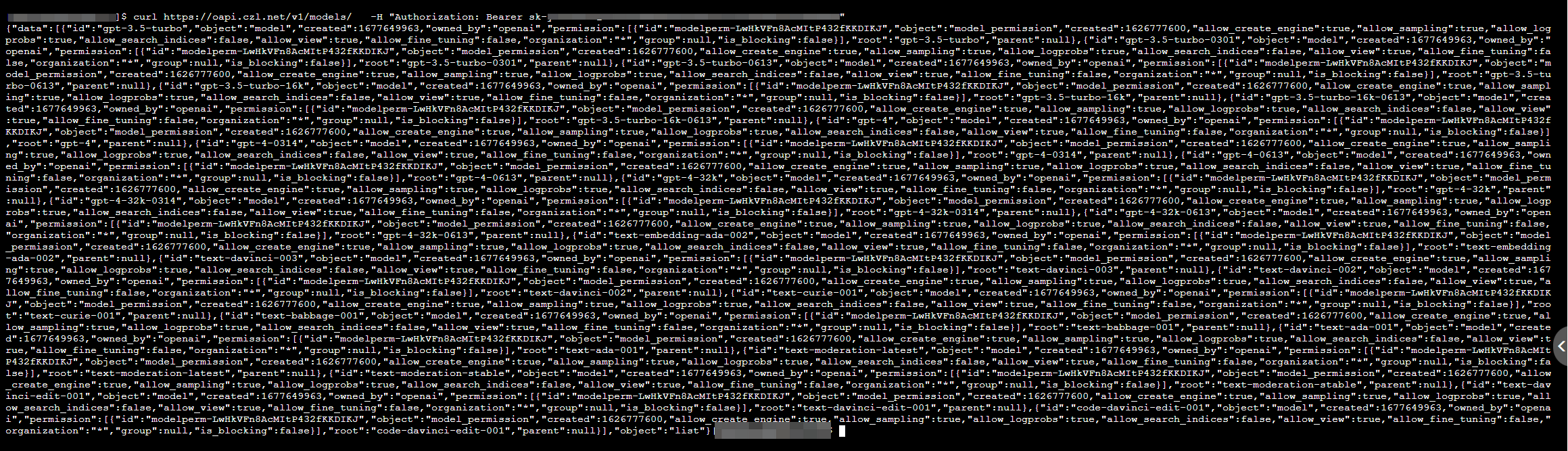

GET https://oapi.czl.net/v1/models/

Lists the currently available models and provides basic information about each one, such as the owner and availability.

Example request

curl https://oapi.czl.net/v1/models \
  -H "Authorization: Bearer $OPENAI_API_KEY"

Response

{
  "data": [{
    "id": "gpt-3.5-turbo",
    "object": "model",
    "created": 1677649963,
    "owned_by": "openai",
    "permission": [{
      "id": "modelperm-LwHkVFn8AcMItP432fKKDIKJ",
      "object": "model_permission",
      "created": 1626777600,
      "allow_create_engine": true,
      "allow_sampling": true,
      "allow_logprobs": true,
      "allow_search_indices": false,
      "allow_view": true,
      "allow_fine_tuning": false,
      "organization": "*",
      "group": null,
      "is_blocking": false
    }],
    "root": "gpt-3.5-turbo",
    "parent": null
  }, {
    "id": "gpt-3.5-turbo-16k",
    ...
    }],
    "root": "gpt-3.5-turbo-16k",
    "parent": null
  }, {
    "id": "gpt-3.5-turbo-16k-0613",
    ...
    }],
    "root": "gpt-3.5-turbo-16k-0613",
    "parent": null
  }, {
    "id": "gpt-4",
    ...
    }],
    "root": "gpt-4",
    "parent": null
  }, {
    "id": "gpt-4-0613",
    "object": "model",
    "created": 1677649963,
    "owned_by": "openai",
    "permission": [{
      "id": "modelperm-LwHkVFn8AcMItP432fKKDIKJ",
      "object": "model_permission",
      ...
    }],
    "root": "gpt-4-0613",
    "parent": null
  },
  ......
  {
    "id": "code-davinci-edit-001",
    ......
    "root": "code-davinci-edit-001",
    "parent": null
  }],
  "object": "list"
}
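
Extracting the model IDs from a list response like the one above might look like this. It is a sketch that assumes a complete, valid JSON body; the sample is a trimmed stand-in that keeps only the fields the helper reads, and model_ids is a hypothetical name.

```python
import json

# Trimmed stand-in for a real list response; only the fields used below are kept.
SAMPLE_LIST = """
{
  "data": [
    {"id": "gpt-3.5-turbo", "object": "model", "owned_by": "openai"},
    {"id": "gpt-4", "object": "model", "owned_by": "openai"}
  ],
  "object": "list"
}
"""


def model_ids(list_response_text):
    """Return the id of every model in a list-models response body."""
    body = json.loads(list_response_text)
    if body.get("object") != "list":
        raise ValueError("expected a response whose object field is 'list'")
    return [entry["id"] for entry in body["data"]]


if __name__ == "__main__":
    print(model_ids(SAMPLE_LIST))
```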

Retrieve model

GET https://oapi.czl.net/v1/models/{model}

Retrieves a model instance, providing basic information about the model such as the owner and permissions.

Path parameters

model string Required

The ID of the model to use for this request

Example request

curl https://oapi.czl.net/v1/models/gpt-4 \
  -H "Authorization: Bearer $OPENAI_API_KEY"

Response

{
  "id": "gpt-4",
  "object": "model",
  "created": 1677649963,
  "owned_by": "openai",
  "permission": [...],
  "root": "gpt-4",
  "parent": null
}

Chat

Given a list of messages comprising a conversation, the model returns a response.

Create chat completion

POST https://oapi.czl.net/v1/chat/completions

Creates a model response for the given chat conversation.

Request body

model string Required

ID of the model to use. See the model endpoint compatibility table for details on which models work with the Chat API.

messages array Required

A list of messages comprising the conversation so far.

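| Parameter | Type | Required | Description |
|---|---|---|---|
| role | string | Yes | The role of the message author. One of system, user, assistant, or function. |
| content | string | No | The content of the message. Content is required for all messages except assistant messages with function calls. |
| name | string | No | The name of the author. Required if the role is function, in which case it should be the name of the function whose response is in the content. May contain only a-z, A-Z, 0-9, and underscores, with a maximum length of 64 characters. |
| function_call | object | No | The name and arguments of a function that should be called, as generated by the model. |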
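
The rules in the table above can be checked on the client side before sending a request. The following is a rough sketch of such a check, not the validation the server actually performs; message_problems is a hypothetical helper name.

```python
import re

ALLOWED_ROLES = {"system", "user", "assistant", "function"}
# a-z, A-Z, 0-9 and underscores, at most 64 characters (per the table above).
NAME_PATTERN = re.compile(r"[A-Za-z0-9_]{1,64}")


def message_problems(message):
    """Return a list of human-readable problems found in one message dict."""
    problems = []
    role = message.get("role")
    if role not in ALLOWED_ROLES:
        problems.append(f"unknown role: {role!r}")

    # Only assistant messages that carry a function call may omit content.
    has_call = role == "assistant" and "function_call" in message
    if message.get("content") is None and not has_call:
        problems.append("content is required")

    name = message.get("name")
    if role == "function" and name is None:
        problems.append("name is required for function messages")
    if name is not None and not NAME_PATTERN.fullmatch(name):
        problems.append("name must be 1-64 characters from a-z, A-Z, 0-9, _")
    return problems


if __name__ == "__main__":
    print(message_problems({"role": "user", "content": "Hello!"}))
    print(message_problems({"role": "function", "content": "{}"}))
```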

functions array Optional

A list of functions the model may generate JSON inputs for.

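| Parameter | Type | Required | Description |
|---|---|---|---|
| name | string | Yes | The name of the function to be called. May contain only a-z, A-Z, 0-9, underscores, and dashes, with a maximum length of 64 characters. |
| description | string | No | A description of the function. |
| parameters | object | No | The parameters the function accepts, described as a JSON Schema object. See the examples in the guide and the JSON Schema reference for details on the format. |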
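
As an illustration of the functions parameter, the sketch below builds a request that carries one function definition. The function get_current_weather and its schema are invented for this example, and build_chat_request is a hypothetical helper; the request object is constructed but not sent.

```python
import json
import urllib.request

API_URL = "https://oapi.czl.net/v1/chat/completions"

# Hypothetical function definition; the name and schema are made up for illustration.
WEATHER_FUNCTION = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}


def build_chat_request(api_key, model, messages, functions=None):
    """Build (but do not send) a POST request for the chat completions endpoint."""
    body = {"model": model, "messages": messages}
    if functions:
        body["functions"] = functions
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request(
        "sk-placeholder",  # placeholder, not a real key
        "gpt-3.5-turbo",
        [{"role": "user", "content": "What is the weather in Paris?"}],
        functions=[WEATHER_FUNCTION],
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the request to urllib.request.urlopen would send it; that step is left out here so the sketch runs without a network connection or a valid key.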

Example request

curl https://oapi.czl.net/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "Hello!"}]
  }'

Parameters

{
  "model": "gpt-3.5-turbo",
  "messages": [{"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "Hello!"}]
}

Response

{
  "id": "chatcmpl-123",
  "object": "chat.completion",
  "created": 1677652288,
  "choices": [{
    "index": 0,
    "message": {
      "role": "assistant",
      "content": "\n\nHello there, how may I assist you today?"
    },
    "finish_reason": "stop"
  }],
  "usage": {
    "prompt_tokens": 9,
    "completion_tokens": 12,
    "total_tokens": 21
  }
}

Other parameters

function_call

- Type: string or object

- Optional

- Controls how the model responds to function calls.

- Options:

  - "none": The model does not call a function and responds to the end-user.

  - "auto": The model can choose between responding to the end-user or calling a function.

  - {"name": "my_function"}: The model is forced to call the specified function.

- Default behavior:

  - "none" if there are no functions present.

  - "auto" if functions are present.
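
The default described above can be written out as a small helper. This only mirrors the documented rule on the client side and says nothing about how the server implements it; effective_function_call is a hypothetical name.

```python
def effective_function_call(request_body):
    """Return the function_call setting that applies to a request body."""
    if "function_call" in request_body:
        # An explicit "none", "auto", or {"name": ...} always wins.
        return request_body["function_call"]
    # Documented default: "auto" when functions are present, otherwise "none".
    return "auto" if request_body.get("functions") else "none"


if __name__ == "__main__":
    print(effective_function_call({"model": "gpt-3.5-turbo", "messages": []}))
    print(effective_function_call({"functions": [{"name": "my_function"}]}))
    print(effective_function_call({"function_call": {"name": "my_function"}}))
```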

temperature

- Type: number

- Optional

- Defaults to 1

- Determines the sampling temperature, ranging from 0 to 2.

- Higher values like 0.8 make the output more random.

- Lower values like 0.2 make the output more focused and deterministic.

- We generally recommend adjusting this or top_p but not both.
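
To see why higher temperatures give more random output, the sketch below applies the textbook softmax-with-temperature formula to made-up scores. It illustrates the general technique under the assumption that the service follows it; the logits are invented, and the sketch does not model a temperature of exactly 0, which the API accepts.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]  # invented scores for three candidate tokens
    for temperature in (0.2, 0.8, 2.0):
        probabilities = softmax_with_temperature(logits, temperature)
        print(temperature, [round(p, 3) for p in probabilities])
```

At low temperature almost all probability goes to the highest-scoring token; at high temperature the distribution flattens.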

top_p

- Type: number

- Optional

- Defaults to 1

- An alternative to sampling with temperature.

- Determines the threshold probability mass.

- Only the tokens comprising the top_p probability mass are considered.

- For example, a value of 0.1 means only the tokens with the top 10% probability mass are considered.

- We generally recommend adjusting this or temperature but not both.
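
The "top_p probability mass" idea can be sketched as nucleus filtering: keep the most likely tokens until their cumulative probability reaches top_p. The token names and probabilities below are invented, and this is an illustration of the general technique rather than the service's actual sampler.

```python
def nucleus(probabilities, top_p):
    """Return the tokens kept by top_p filtering, most likely first."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, probability in ranked:
        kept.append(token)
        cumulative += probability
        if cumulative >= top_p:
            break  # the kept tokens now cover the requested probability mass
    return kept


if __name__ == "__main__":
    distribution = {"cat": 0.5, "dog": 0.3, "bird": 0.15, "fish": 0.05}
    for top_p in (0.1, 0.7, 0.9):
        print(top_p, nucleus(distribution, top_p))
```

Sampling then happens only among the kept tokens, after renormalizing their probabilities.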

n

- Type: integer

- Optional

- Defaults to 1

- Determines how many chat completion choices to generate for each input message.

stream

- Type: boolean

- Optional

- Defaults to false

- If set to true, partial message deltas will be sent (like in ChatGPT).

- Tokens will be sent as data-only server-sent events as they become available.

- The stream is terminated by a data: [DONE] message.
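
A streamed response can be reassembled by reading the data lines until the [DONE] marker. The sketch below works on a list of already-received lines; the chunk layout (choices[0].delta.content) is assumed to follow the OpenAI streaming format, and the sample lines are hand-written rather than captured from the service.

```python
import json

# Hand-written sample of server-sent event lines; real chunks carry more fields.
SAMPLE_STREAM = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}, "finish_reason": null}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": "Hello"}, "finish_reason": null}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": " there"}, "finish_reason": null}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]}',
    "",
    "data: [DONE]",
]


def collect_stream_text(lines):
    """Concatenate the content deltas of the first choice until [DONE] is seen."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not data
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        delta = chunk["choices"][0].get("delta", {})
        parts.append(delta.get("content") or "")
    return "".join(parts)


if __name__ == "__main__":
    print(collect_stream_text(SAMPLE_STREAM))
```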

stop

- Type: string or array

- Optional

- Defaults to null

- Specifies up to 4 sequences where the API will stop generating further tokens.

max_tokens

- Type: integer

- Optional

- Defaults to inf

- Specifies the maximum number of tokens to generate in the chat completion.

- The total length of input tokens and generated tokens is limited by the model's context length.

presence_penalty

- Type: number

- Optional

- Defaults to 0

- Number between -2.0 and 2.0.

- Positive values penalize new tokens based on whether they appear in the text so far, increasing the model's likelihood to talk about new topics.

frequency_penalty

- Type: number

- Optional

- Defaults to 0

- Number between -2.0 and 2.0.

- Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model's likelihood to repeat the same line verbatim.
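
The two penalties can be illustrated with the adjustment described in OpenAI's parameter documentation, where each token's logit is reduced by its count times frequency_penalty, plus presence_penalty if it has appeared at all. Whether this service applies exactly that formula is an assumption; the token IDs and logits below are invented.

```python
from collections import Counter


def penalized_logits(logits, generated_token_ids, presence_penalty=0.0, frequency_penalty=0.0):
    """Apply presence and frequency penalties to a {token_id: logit} mapping."""
    counts = Counter(generated_token_ids)
    adjusted = {}
    for token_id, logit in logits.items():
        count = counts.get(token_id, 0)
        adjusted[token_id] = (
            logit
            - count * frequency_penalty  # grows with every repetition
            - (1.0 if count > 0 else 0.0) * presence_penalty  # flat, once seen
        )
    return adjusted


if __name__ == "__main__":
    logits = {1: 1.0, 2: 1.0}  # two equally likely tokens
    history = [1, 1, 1]        # token 1 was already generated three times
    print(penalized_logits(logits, history, presence_penalty=0.5, frequency_penalty=0.25))
```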

logit_bias

- Type: map

- Optional

- Defaults to null

- Modifies the likelihood of specified tokens appearing in the completion.

- Accepts a JSON object that maps tokens (specified by their token ID in the tokenizer) to an associated bias value from -100 to 100.

- Mathematically, the bias is added to the logits generated by the model prior to sampling.
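
The effect of adding a bias to the logits before sampling can be sketched as below. The token IDs and scores are invented, and the helper names are hypothetical; the keys of the bias object are strings because JSON object keys are always strings.

```python
import math


def apply_logit_bias(logits, logit_bias):
    """Add per-token biases to a {token_id: logit} mapping.

    logit_bias uses JSON-style string keys, e.g. {"11": -100}.
    """
    biased = dict(logits)
    for token_id_text, bias in logit_bias.items():
        if not -100 <= bias <= 100:
            raise ValueError("bias must be between -100 and 100")
        token_id = int(token_id_text)
        if token_id in biased:
            biased[token_id] += bias
    return biased


def softmax(scores):
    """Convert a {token_id: logit} mapping into probabilities."""
    peak = max(scores.values())
    weights = {token_id: math.exp(value - peak) for token_id, value in scores.items()}
    total = sum(weights.values())
    return {token_id: weight / total for token_id, weight in weights.items()}


if __name__ == "__main__":
    logits = {10: 1.0, 11: 1.0}  # invented token IDs with equal scores
    biased = apply_logit_bias(logits, {"11": -100})
    print(softmax(logits))
    print(softmax(biased))
```

With a bias of -100 the second token's probability drops to effectively zero, which is why extreme values act like a ban or a forced selection.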

user

- Type: string

- Optional

- A unique identifier representing your end-user, which can help OpenAI to monitor and detect abuse.